Recently I was asked to help with recruiting and onboarding new members. As our team and projects grow in both size and complexity, our hand-over documentation has become complicated and only works on certain operating systems. To make onboarding fast and reproducible, I had to come up with a new plan: an isolated coding environment with as small a learning curve as possible.

My goal

An isolated environment where every member gets their own resources and custom preinstalled packages.

Our project at Jobhopin combines multiple languages (Rust, Python, …), and creating a virtualenv is tedious, often taking multiple attempts to get working. It usually took newcomers 2-3 weeks to learn our current projects and work effectively.

Why not use container images?

At first I encouraged the team to use Docker and docker-compose to code and debug projects, but our team has both engineers and scientists. The science team found it hard to debug inside Docker, and it took new members a lot of time to learn and make use of Docker images. Furthermore, I wanted to mimic a real production machine on which the member has root access: I wanted folks to be able to set sysctls, install new packages, make iptables rules, configure networking with ip, run perf, basically anything at all, with strong isolation.

Why not use virtual machines?

I tried some VM solutions (QEMU and VMware) to create one VM per member, but ran into too many problems along the way:

- VM boot time is slow, and the snapshots are too large

- There is no convenient API, and VM snapshots have to be created manually, without any reproducible code

I want members to only need to provide their credentials and a custom VM size to instantly launch a fresh virtual machine.

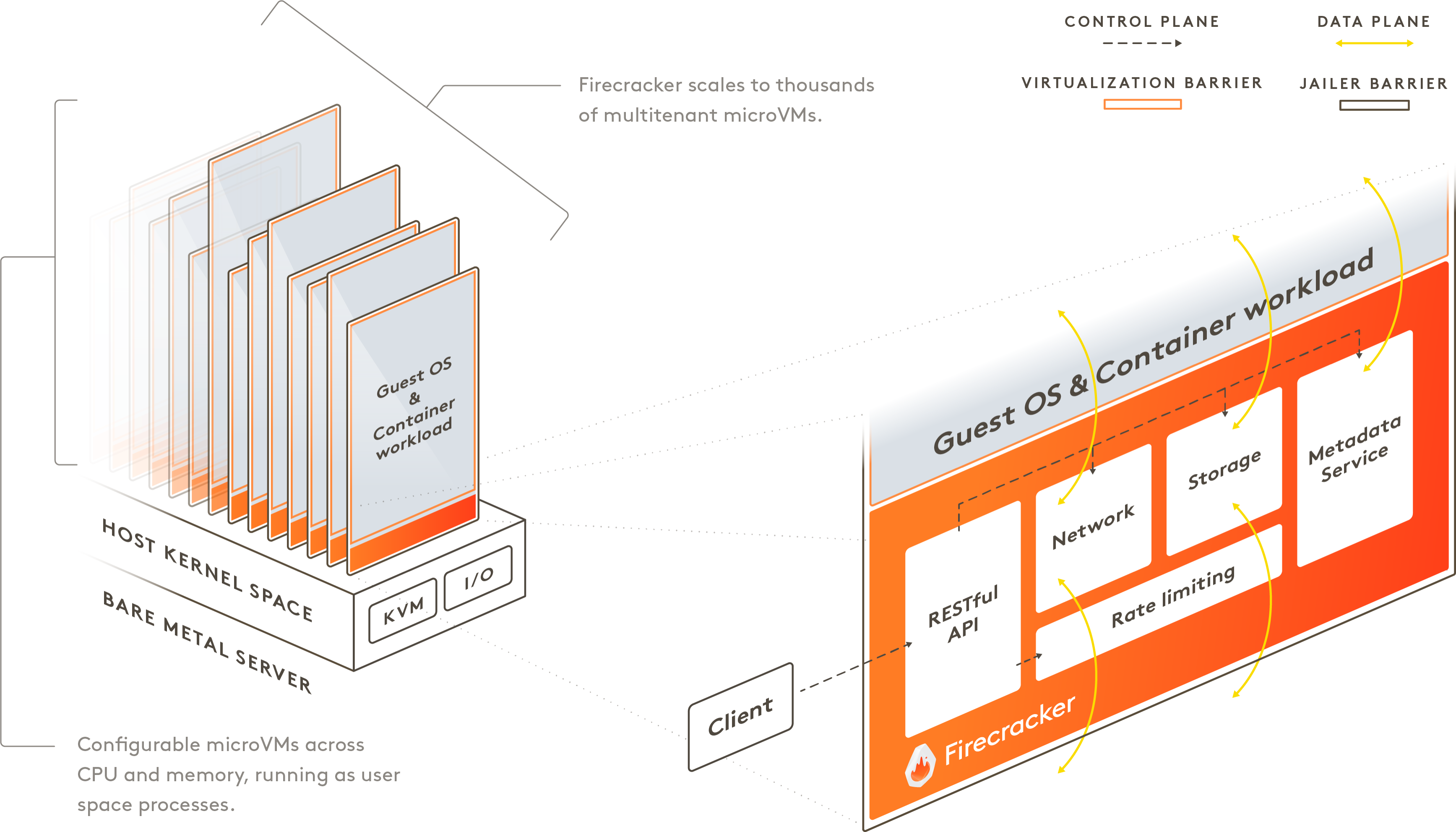

Firecracker can boot a VM from a base OCI container image in less than a second

When I first read about Firecracker's release, I thought it was just a tool for cloud providers, offering stronger security than bare Docker; I didn't think it was something I could use directly to create a dev VM.

After some reading, I was impressed by how fast and convenient Firecracker is at booting VMs.

Some comparisons between Firecracker and QEMU

Firecracker integrates with existing container tooling, which makes adoption fairly painless. I chose Ignite, whose CLI is very similar to Docker's:

ignite run instead of docker run

How to use Firecracker with Ignite

Installing Ignite and starting a fresh VM is very simple; there are basically 3 steps:

Step 1: Check that KVM virtualization is enabled on your system, then install Ignite by following the Installing-guide

Step 2: Create a sample VM config, config.yaml

Step 3: Start your VM in under 125 ms

According to Firecracker's specification, it takes <= 125 ms to go from receiving the Firecracker InstanceStart API call to the start of the Linux guest user-space /sbin/init process.
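Since the goal is for each member to supply only a VM name, a size, and a public key, Step 2 can be scripted. Below is a minimal Python sketch that renders a per-member config.yaml. It assumes Ignite's declarative VM format with apiVersion ignite.weave.works/v1alpha4 and the spec fields image.oci, cpus, memory, diskSize, and ssh; older Ignite releases use an earlier apiVersion, so check the docs for your version. The function name, default sizes, and naming rule are illustrative assumptions, not part of Ignite.

```python
import re
from typing import Optional

# Hypothetical, conservative naming rule (not Ignite's exact rule):
# lowercase letters, digits and dashes, so the value is safe to embed in YAML.
_NAME_RE = re.compile(r"^[a-z0-9][a-z0-9-]*$")


def render_vm_config(
    name: str,
    image: str = "weaveworks/ignite-ubuntu",
    cpus: int = 2,
    memory: str = "2GB",
    disk_size: str = "10GB",
    ssh_public_key: Optional[str] = None,
    api_version: str = "ignite.weave.works/v1alpha4",  # assumption: depends on Ignite release
) -> str:
    """Render an Ignite VM config for one team member as YAML text."""
    if not _NAME_RE.match(name):
        raise ValueError(f"invalid VM name: {name!r}")
    if cpus < 1:
        raise ValueError("cpus must be >= 1")
    # `ssh: true` asks Ignite to generate a key pair; a path imports that public key.
    ssh_value = ssh_public_key if ssh_public_key else "true"
    lines = [
        f"apiVersion: {api_version}",
        "kind: VM",
        "metadata:",
        f"  name: {name}",
        "spec:",
        "  image:",
        f"    oci: {image}",
        f"  cpus: {cpus}",
        f"  memory: {memory}",
        f"  diskSize: {disk_size}",
        f"  ssh: {ssh_value}",
    ]
    return "\n".join(lines) + "\n"
```

For example, save the output of render_vm_config("alice-vm", cpus=4, memory="4GB", ssh_public_key="/home/alice/.ssh/id_rsa.pub") to config.yaml and start it with sudo ignite run --config config.yaml. Passing a public-key path lets the member SSH in with their own key right away.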

Voilà 🎉 🎉 🎉 you’ve successfully created a new VM. To list the running VMs, run ignite ps.

Once the VM has booted, its network is configured and it is accessible from the host via password-less SSH, with sudo permissions.

SSH into the VM

Via the Ignite CLI: ignite ssh <vm-name>

To exit SSH, just quit the shell process with exit.

Via the ssh CLI and an RSA key

Add your public key to ~/.ssh/authorized_keys in the newly booted VM, or put the path to your public key in the VM config and create a new VM.

Then SSH into your VM.
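If you go the authorized_keys route, it is easy to get subtly wrong: duplicate keys, a missing trailing newline, or group/world-writable files that sshd's StrictModes check rejects. Here is a small, hypothetical Python helper that appends a key idempotently and applies the conventional 0700/0600 permissions. It assumes Python 3 is available wherever it runs, which a stock Ubuntu base image may not provide.

```python
import os
from pathlib import Path
from typing import Optional


def add_authorized_key(public_key: str, home: Optional[Path] = None) -> bool:
    """Append one OpenSSH public key to <home>/.ssh/authorized_keys.

    Returns True if the key was added, False if it was already present.
    """
    key = public_key.strip()
    if not key or "\n" in key:
        raise ValueError("expected exactly one public key line")

    home = home if home is not None else Path.home()
    ssh_dir = home / ".ssh"
    ssh_dir.mkdir(parents=True, exist_ok=True)
    auth_file = ssh_dir / "authorized_keys"

    content = auth_file.read_text() if auth_file.exists() else ""
    if key in (line.strip() for line in content.splitlines()):
        return False  # idempotent: never add the same key twice

    # Make sure the new key starts on its own line.
    if content and not content.endswith("\n"):
        content += "\n"
    auth_file.write_text(content + key + "\n")

    # sshd's StrictModes refuses keys if these are writable by group/others.
    os.chmod(ssh_dir, 0o700)
    os.chmod(auth_file, 0o600)
    return True
```

Run it inside the VM with the contents of your id_rsa.pub; calling it again with the same key is a no-op.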

Secure bastion host

Finally, I put a bastion host in front so that all SSH traffic to the VMs goes through a single, hardened entry point.

~/.ssh/config

Add the config above to your ~/.ssh/config and run ssh haiche-vm from your client to reach the VM.
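With several members, these ~/.ssh/config entries can be generated instead of hand-written. This sketch assumes the bastion setup described above: the client connects to a bastion host (for example, the machine running Ignite) and jumps from there to the VM's private IP using OpenSSH's ProxyJump option (OpenSSH 7.3+). The host names, IPs, users, and the "-bastion" alias suffix are placeholders; only haiche-vm comes from this post, and root as the VM user is an assumption.

```python
import textwrap


def render_ssh_config(alias: str, vm_ip: str, bastion_host: str,
                      bastion_user: str, vm_user: str = "root",
                      identity_file: str = "~/.ssh/id_rsa") -> str:
    """Render ~/.ssh/config entries: the bastion first, then the VM reached through it."""
    bastion_alias = f"{alias}-bastion"  # hypothetical naming convention
    # ProxyJump makes ssh connect to the bastion first and tunnel on to the VM.
    return textwrap.dedent(f"""\
        Host {bastion_alias}
            HostName {bastion_host}
            User {bastion_user}
            IdentityFile {identity_file}

        Host {alias}
            HostName {vm_ip}
            User {vm_user}
            IdentityFile {identity_file}
            ProxyJump {bastion_alias}
        """)
```

Append the output of render_ssh_config("haiche-vm", "10.0.0.5", "bastion.example.com", "ubuntu") to ~/.ssh/config, and ssh haiche-vm then hops through the bastion automatically.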

How I extend the container image to avoid repeating the setup process

After creating a VM, I usually install conda and some packages to run my project. But I don't want to reinstall conda and recreate the environment every time I create a VM. Here are my steps to extend the base Ubuntu image and use it for a better VM experience:

Dockerfile

miniconda-vm.yaml

Here is the tricky part: the current Ignite doesn't support local Docker image builds, so I have to push the image to the public Docker Hub registry before Ignite can import it. To start a new VM:

If you use a private registry such as ECR, run the command above with --runtime=docker so that the image can be pulled from the private registry.
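The build, push, and run sequence above is easy to script. The sketch below only builds the argument lists (a dry run you can print or pass to subprocess.run); the image name, VM name, and sizes are hypothetical, and the ignite flags used (--name, --cpus, --memory, --size, --ssh, --runtime) should be checked against ignite run --help for your Ignite version.

```python
import shlex
from typing import List


def build_push_run_commands(image: str, vm_name: str, private_registry: bool = False,
                            cpus: int = 2, memory: str = "2GB", size: str = "10GB",
                            context_dir: str = ".") -> List[List[str]]:
    """Return the docker build/push and ignite run commands, without executing them."""
    run = ["ignite", "run", image, "--name", vm_name,
           "--cpus", str(cpus), "--memory", memory, "--size", size, "--ssh"]
    if private_registry:
        # As described above: let the docker runtime pull from e.g. ECR.
        run.append("--runtime=docker")
    return [
        ["docker", "build", "-t", image, context_dir],  # build from the Dockerfile
        ["docker", "push", image],                        # push so Ignite can import it
        run,
    ]


if __name__ == "__main__":
    # Dry run: print shell-quoted commands for review.
    for cmd in build_push_run_commands("myuser/miniconda-vm:latest", "alice-vm"):
        print(shlex.join(cmd))
```

Ignite usually has to run as root, so prefix the ignite command with sudo when you actually execute it.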

Test the new VM with the conda environment
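A quick way to confirm that the new VM really runs Python from the baked-in conda environment is to check the interpreter's prefix for the conda-meta directory, which conda creates in every environment (including base). A minimal sketch; the function name is hypothetical:

```python
import sys
from pathlib import Path
from typing import Optional


def is_conda_env(prefix: Optional[str] = None) -> bool:
    """Return True if `prefix` (default: this interpreter's sys.prefix) is a conda environment."""
    root = Path(prefix if prefix is not None else sys.prefix)
    # conda records installed packages under <prefix>/conda-meta.
    return (root / "conda-meta").is_dir()


if __name__ == "__main__":
    print(f"interpreter: {sys.executable}")
    print(f"prefix:      {sys.prefix}")
    print(f"conda env:   {is_conda_env()}")
```

Run it with the environment's python inside the VM; if the image was built correctly it reports True.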

So far I like the configuration-file approach; it's easier to see everything in one place. Now a member simply provides the config file and a public key to instantly create a fresh VM with all the needed environments.

Cloud support for nested virtualization

Another question I had in mind: “OK, where am I going to run these Firecracker VMs in production?” The funny thing about running a VM in the cloud is that cloud instances are already VMs. Running a VM inside a VM is called “nested virtualization”, and not all cloud providers support it: for example, AWS only supports it on bare-metal instances, which are ridiculously expensive.

GCP supports nested virtualization, but not by default; you have to enable it when creating the VM. DigitalOcean supports nested virtualization by default, even on its smallest droplets.
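On a cloud instance, a quick way to tell whether the provider exposes hardware virtualization to your VM is to look for the vmx (Intel) or svm (AMD) CPU flag and a read/write-accessible /dev/kvm device, which Firecracker needs. The same check is handy for Step 1 on a physical machine. This is a minimal sketch for x86 Linux only; the function names are hypothetical.

```python
import os
from pathlib import Path
from typing import Dict, Set

# Intel VT-x shows up as "vmx", AMD-V as "svm" (x86 only).
VIRT_FLAGS = {"vmx", "svm"}


def cpu_virt_flags(cpuinfo_text: str) -> Set[str]:
    """Return the hardware-virtualization flags found in /proc/cpuinfo text."""
    flags: Set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            flags.update(value.split())
    return flags & VIRT_FLAGS


def kvm_ready(cpuinfo_path: str = "/proc/cpuinfo",
              kvm_device: str = "/dev/kvm") -> Dict[str, object]:
    """Summarize whether this machine can run KVM-based VMs such as Firecracker."""
    cpuinfo = Path(cpuinfo_path)
    text = cpuinfo.read_text() if cpuinfo.exists() else ""
    return {
        "cpu_virt_flags": sorted(cpu_virt_flags(text)),
        "dev_kvm_exists": os.path.exists(kvm_device),
        # Firecracker needs read/write access to /dev/kvm.
        "dev_kvm_rw": os.access(kvm_device, os.R_OK | os.W_OK),
    }


if __name__ == "__main__":
    print(kvm_ready())
```

An empty cpu_virt_flags list on a cloud instance usually means nested virtualization is not enabled for it.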

Some afterthoughts

A few things about this approach are still on my mind:

- Currently Firecracker doesn't support snapshot restore, but it will in the near future: https://github.com/firecracker-microvm/firecracker/issues/1184

- I can't easily upgrade the base image the way docker pull does. I was dealing with this by making a copy every time, but that's kind of slow and felt really inefficient. There are some solutions online that I'll try later, such as Device mapper to manage firecracker images.

- I don't know yet whether it's possible to run graphical applications in Firecracker.

- Firecracker with Kubernetes is a new thing, but I don't find it appealing because using Pods to group containers is already fast and secure. Someone shared this useful thread with me about why they aren't compatible yet:

I often hear people ask why Kubernetes and Firecracker (FC) can’t just be used together. It seems like an intuitive combination, Kubernetes is popular for orchestration, and Firecracker provides strong isolation boundaries. So why aren’t they compatible yet? Read on 🧵

— Micah Hausler (@micahhausler) March 13, 2020

Here are some links I found useful when researching Firecracker:

- How AWS Firecracker works: a deep dive that demonstrates some of the concepts with a tiny version of Firecracker

- AWS Lambda and Fargate serverless computing is backed by Firecracker

- Comparing other isolation techniques: Sandboxing and Workload Isolation
